Facial Recognition Technology: Federal Law Enforcement Agencies Should Better Assess Privacy and Other Risks

Fast Facts

We surveyed 42 federal agencies that employ law enforcement officers about their use of facial recognition technology.

- 20 reported owning such systems or using systems owned by others

- 6 reported using the technology to help identify people suspected of violating the law during the civil unrest, riots, or protests following the death of George Floyd in May 2020

- 3 acknowledged using it on images of the U.S. Capitol attack on Jan. 6

- 15 reported using non-federal systems

We recommended that 13 agencies track employee use of non-federal systems and assess the risks these systems can pose regarding privacy, accuracy, and more.

Highlights

What GAO Found

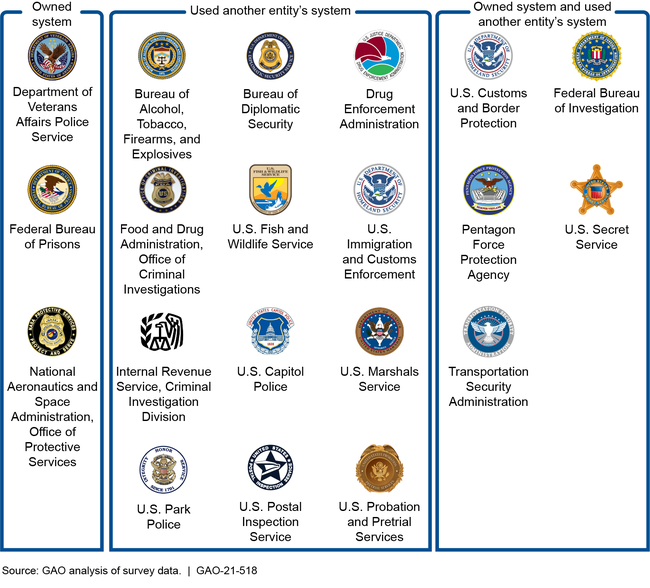

GAO surveyed 42 federal agencies that employ law enforcement officers about their use of facial recognition technology. Twenty reported owning systems with facial recognition technology or using systems owned by other entities, such as other federal, state, local, and non-government entities (see figure).

Ownership and Use of Facial Recognition Technology Reported by Federal Agencies that Employ Law Enforcement Officers

Note: For more details, see figure 2 in GAO-21-518.

Agencies reported using the technology to support several activities (e.g., criminal investigations) and in response to COVID-19 (e.g., to verify an individual's identity remotely). Six agencies reported using the technology on images of the unrest, riots, or protests following the death of George Floyd in May 2020. Three agencies reported using it on images of the events at the U.S. Capitol on January 6, 2021. Agencies said the searches used images of suspected criminal activity.

All fourteen agencies that reported using the technology to support criminal investigations also reported using systems owned by non-federal entities. However, only one had awareness of which non-federal systems its employees used. By having a mechanism to track which non-federal systems employees use and by assessing the related risks (e.g., privacy and accuracy-related risks), agencies can better mitigate risks to themselves and the public.

Why GAO Did This Study

Federal agencies that employ law enforcement officers can use facial recognition technology to assist criminal investigations, among other activities. For example, the technology can help identify an unknown individual in a photo or in video surveillance footage.

GAO was asked to review federal law enforcement use of facial recognition technology. This report examines (1) the ownership and use of facial recognition technology by federal agencies that employ law enforcement officers, (2) the types of activities these agencies use the technology to support, and (3) the extent to which these agencies track employee use of facial recognition technology owned by non-federal entities.

GAO administered a survey questionnaire to 42 federal agencies that employ law enforcement officers regarding their use of the technology. GAO also reviewed documents (e.g., system descriptions) and interviewed officials from selected agencies (e.g., agencies that owned facial recognition technology). This is a public version of a sensitive report that GAO issued in April 2021. Information that agencies deemed sensitive has been omitted.

Recommendations

GAO is making two recommendations to each of 13 federal agencies to implement a mechanism to track what non-federal systems are used by employees, and assess the risks of using these systems. Twelve agencies concurred with both recommendations. U.S. Postal Service concurred with one and partially concurred with the other. GAO continues to believe the recommendation is valid, as described in the report.

Recommendations for Executive Action

| Agency Affected | Recommendation | Status |

|---|---|---|

| Bureau of Alcohol, Tobacco, Firearms and Explosives | The Director of the Bureau of Alcohol, Tobacco, Firearms and Explosives should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 1) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the Bureau of Alcohol, Tobacco, Firearms and Explosives (ATF) used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that ATF implement a mechanism to track what non-federal systems with facial recognition technology are used by employes to support investigative activities. A December 2023 Department of Justice (DOJ) interim policy requires components, including ATF, to implement a tracking mechanism for the use of facial recognition systems. In June 2025, ATF officials told us they had been drafting an ATF facial recognition technology policy that would include a tracking mechanism requirement; however, the effort is on hold pending guidance from the new administration. As of June 2025, we consider this recommendation partially addressed, and will continue to monitor for ATF's implementation of a tracking mechanism.

|

| Bureau of Alcohol, Tobacco, Firearms and Explosives | The Director of the Bureau of Alcohol, Tobacco, Firearms and Explosives should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 2) |

As of June 2025, ATF has not yet conducted a risk assessment of its use of non-federal systems, and as such, this recommendation remains open.

|

| Drug Enforcement Administration | The Administrator for the Drug Enforcement Administration should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 3) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the Drug Enforcement Administration (DEA) used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that DEA implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. A December 2023 Department of Justice (DOJ) interim policy requires components, including DEA, to implement a tracking mechanism for the use of facial recognition systems. In May 2024, DEA reported that it was in the process of finalizing an interim DEA policy for the use of facial recognition technology, and ensuring its compliance with the DOJ policy. However, in June 2025, DEA reported that implementation of a final DOJ policy was placed on hold, and as a result DEA paused its process of drafting its own interim policy. The agency said when DOJ resumes its efforts or otherwise gives its components policy direction, DEA will resume creating a policy that will outline the framework for usage of facial recognition technology, including a tracking mechanism for non-federal systems used to support investigative efforts. As of June 2025, we consider this recommendation partially addressed, and will continue to monitor for DEA's implementation of a tracking mechanism.

|

| Drug Enforcement Administration | The Administrator for the Drug Enforcement Administration should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 4) |

As of June 2025, DEA has not yet conducted a risk assessment of its use of non-federal systems, and as such, this recommendation remains open.

|

| Federal Bureau of Investigation | The Director of the FBI should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 5) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the FBI used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended the FBI implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In August 2022, FBI issued a facial recognition procurement, tracking, and evaluation policy directive. The directive requires all FBI personnel to coordinate facial recognition system procurement with FBI's Science and Technology Branch, which is responsible for the tracking and evaluation of the systems. As a result, the agency will have better visibility into the facial recognition systems its employees use, and be better positioned to assess the risks of technologies it uses, to support criminal investigations.

|

| Federal Bureau of Investigation | The Director of the FBI should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 6) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the Federal Bureau of Investigation used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or assess the risks of using such systems. Consequently, we recommended the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. In June 2025, the agency provided documents that included assessments of risks, including privacy and accuracy risks, for their use of multiple commercial facial recognition systems. As a result, the agency will be better positioned to understand and potentially mitigate the risks of using non-federal facial recognition technology.

|

| United States Marshals Service | The Director of the U.S. Marshals Service should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 7) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Marshals Service used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that the Marshals Service implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In October 2022, the agency communicated to all its district offices that employees may only use facial recognition technology that the agency's Office of General Counsel and Investigative Operations Division have approved. In addition, when employees submit requests to use facial recognition technology, the agency will track those requests and authorizations. As a result, this recommendation is closed as implemented.

|

| United States Marshals Service | The Director of the U.S. Marshals Service should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 8) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Marshals Service used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or assess the risks of using such systems. Consequently, we recommended the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. The agency provided an Initial Privacy Assessment it completed for its use of a commercial facial recognition system. As part of the assessment, the agency has identified risks associated with use of the technology. As a result, the agency will be better positioned to understand and potentially mitigate the risks of using non-federal facial recognition technology.

|

| United States Customs and Border Protection | The Commissioner of CBP should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 9) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Customs and Border Protection (CBP) used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that CBP implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In June 2022, CBP issued a policy directive for IT systems using personally identifiable information, including facial recognition technology. This policy requires all personnel to notify the CBP Privacy Office regarding the implementation, or proposed implementation, of such technologies. CBP Privacy Office documentation is to include a general description of the technology, what personally identifiable information is collected, and from whom, and how that information will be used or retained. As a result, this recommendation is closed as implemented.

|

| United States Customs and Border Protection | The Commissioner of CBP should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 10) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Customs and Border Protection used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or assess the risks of using such systems. Consequently, we recommended the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. In November 2024, the agency provided a Privacy Impact Assessment for its use of two commercial facial recognition systems. As part of the assessment, the agency has identified risks associated with use of the technology, including privacy and accuracy-related risks. As a result, the agency will be better positioned to understand and potentially mitigate the risks of using non-federal facial recognition technology.

|

| United States Secret Service | The Director of the Secret Service should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 11) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Secret Service used non-federal facial recognition systems to support investigative activities, without a mechanism to track which systems employees were using. Consequently, we recommended that the Secret Service implement a mechanism to track what non-federal systems employees use to support investigative activities. In January 2022, the Secret Service issued a requirement to its Office of Investigations and field offices, among other divisions. The agency said that the purpose of the requirement was to capture the use of facial recognition systems owned, contracted, and/or operated by partnering law enforcement agencies during an investigation. For example, the requirement states that all personnel who access a partnering law enforcement agency's system must capture that usage within the Secret Service's Incident Based Reporting (IBR) application. In March 2022, the agency provided us evidence of its IBR application updates, including new fields to capture the facial recognition system's name and owner, and the date the system was used. Furthermore, the agency provided a copy of the IBR user guide instructing staff on how to record the use of facial recognition systems. As a result, this recommendation is closed as implemented.

|

| United States Secret Service | The Director of the Secret Service should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 12) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Secret Service used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or an assessment of the risks of using such systems. Consequently, we recommended that Secret Service, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy risks. In September 2024, Secret Service published a Privacy Impact Assessment of its usage of non-federal agencies' facial recognition systems. Among other things, the Privacy Impact Assessment provides guidance and procedures to minimize risks associated with use of facial recognition technology, including those related to privacy and accuracy. As a result, this recommendation is closed as implemented.

|

| United States Fish and Wildlife Service | The Director of the U.S. Fish and Wildlife Service should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 13) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Fish and Wildlife Service used non-federal facial recognition technology to support investigative activities, without a mechanism to track which systems employees were using. Consequently, we recommended that the U.S. Fish and Wildlife Service implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In July 2021, the agency reported the use of a single non-government technology for all of its facial recognition searches, with access limited to two licensed users. In January 2022, the Assistant Director for Law Enforcement issued a directive providing guidance to the Office of Law Enforcement (OLE) on the use of facial recognition technology for investigative purposes. The directive states that the non-government provider is the only approved system for OLE officers. The directive also requires OLE officers to submit requests to the Wildlife Intelligence Unit for facial recognition searches via an internal case management system. The agency also provided documentation showing the dissemination of this directive to OLE officers. As a result, this recommendation is closed as implemented.

|

| United States Fish and Wildlife Service | The Director of the U.S. Fish and Wildlife Service should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 14) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, U.S. Fish and Wildlife Service (FWS) used non-federal facial recognition technology to support investigative activities, but did not assess the risks of using such systems. Consequently, we recommended to the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. As of August 2023, FWS had deactivated all accounts and discontinued its subscription with the one non-federal facial recognition system provider its law enforcement officers had been using. In August 2024, officials told us the agency has no plans to use non-federal facial recognition technology in the future. As a result, this recommendation is closed as no longer valid.

|

| United States Park Police | The Chief of the U.S. Park Police should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 15) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Park Police used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that Park Police implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In June 2023, Park Police issued a policy stating that all Park Police personnel may only use non-federal facial recognition technology if the Commander of Criminal Investigations assesses the risk of the system, and provides the assessment in writing to the Chief of Police for final approval. The policy directs the Audits and Inspection Unit to maintain copies of the assessments for tracking purposes. As a result, the agency will have better visibility into the facial recognition systems its employees use, and be better positioned to assess the risks of technologies it uses, to support criminal investigations.

|

| United States Park Police | The Chief of the U.S. Park Police should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 16) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, U.S. Park Police used non-federal facial recognition technology to support investigative activities, but did not assess the risks of using such systems. Consequently, we recommended that the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. As of May 2024, officials said that the Department of Interior is planning to create departmental guidance about usage of facial recognition technology. In the meantime, officials said the Department recommended a moratorium on usage of the technology, and received assurances that no law enforcement components are using the technology. In September 2024, officials told us Park Police has no plans to use non-federal facial recognition technology. As a result, this recommendation is closed as no longer valid.

|

| Bureau of Diplomatic Security | The Assistant Secretary of the Bureau of Diplomatic Security should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 17) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the Department of State Bureau of Diplomatic Security used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended the agency implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In August 2025, the agency issued a policy stating that personnel may use facial recognition technology upon review by the Office of the Chief Technology Officer. The policy states the Chief Technology Officer will assess the technology, determine whether to authorize its use, and maintain a record of the facial recognition technology that has been reviewed. As a result, the agency will have better visibility into the facial recognition systems its employees use, and be better positioned to assess the risks of technologies it uses, to support criminal investigations.

|

| Bureau of Diplomatic Security | The Assistant Secretary of the Bureau of Diplomatic Security should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 18) |

In August 2025, Department of State Bureau of Diplomatic Security issued a policy stating personnel may use facial recognition technology upon review by the Office of the Chief Technology Officer. The policy states that the Chief Technology Officer will assess the technology, determine whether to authorize its use, and maintain a record of the facial recognition technology that has been reviewed. We continue to monitor to determine whether the tracking mechanism will capture facial recognition systems, and whether the agency assesses the privacy and accuracy related risks of systems that the agency uses. As of August 2025, this recommendation remains open.

|

| Food and Drug Administration | The Assistant Commissioner of the Food and Drug Administration's Office of Criminal Investigations should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 19) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, Food and Drug Administration's (FDA) Office of Criminal Investigations (OCI) used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that FDA implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In December 2021, the U.S. Department of Health and Human Services reported that OCI had deployed a module in its primary system of records for investigations to track employee use of facial recognition technology. The agency also said it notified OCI employees of the requirement to use the module to document information about their use of facial recognition technology in an investigation. The notice informed employees that they would be required to include the system name, owner of the system, and how the system supported OCI's mission. As a result, this recommendation is closed as implemented.

|

| Food and Drug Administration | The Assistant Commissioner of the Food and Drug Administration's Office of Criminal Investigations should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 20) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the Food and Drug Administration's (FDA) Office of Criminal Investigations (OCI) used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or an assessment of the risks of using such systems. Consequently, we recommended that FDA, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. OCI assessed the risks associated with law enforcement use of facial recognition technology, which informed a facial recognition technology policy OCI issued in October 2022. Among other things, the policy provides guidance and procedures to minimize risks associated with use of facial recognition technology, including those related to privacy and accuracy. As a result, this recommendation is closed as implemented.

|

| Internal Revenue Service | The Chief of the Internal Revenue Service's Criminal Investigation Division should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 21) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the Internal Revenue Service's Criminal Investigation Division used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that Criminal Investigation Division implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In December 2022, the Division notified personnel that all uses of facial recognition technology must be approved by the director of Cyber and Forensic Services. The memorandum noted that the director will maintain requests for use of facial recognition technology, as well as their approval or denial, to allow tracking for auditing purposes. As a result, this recommendation is closed as implemented.

|

| Internal Revenue Service | The Chief of the Internal Revenue Service's Criminal Investigation Division should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 22) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, Internal Revenue Service Criminal Investigation Division used non-federal facial recognition technology to support investigative activities, but did not assess the risks of using such systems. Consequently, we recommended to the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. In December 2022, Criminal Investigation Division created a mechanism for approving and tracking use of facial recognition technology. In May 2024, officials told us that the Division has not used non-federal facial recognition technology since the tracking mechanism was implemented and has no plans to use the technology in the future. As a result, this recommendation is closed as no longer valid.


| Inspection Service | The Chief Postal Inspector of the U.S. Postal Inspection Service should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 23) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Postal Inspection Service (USPIS) used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that USPIS implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In October 2021, USPIS notified inspectors that they are required to enter any use of external organizations' facial recognition tools into a tracking tool called InSite. Within the tool, employees are required to enter the name of the facial recognition system, the date used, the employee's name, and the investigative case number. As a result, this recommendation is closed as implemented.


| Inspection Service | The Chief Postal Inspector of the U.S. Postal Inspection Service should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 24) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ federal law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Postal Inspection Service used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or assess the risks of using such systems. Consequently, we recommended that the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. The agency assessed the risks associated with law enforcement use of non-federal facial recognition technology and provided the results of its assessment to us in March 2023. Among other things, the agency identified risks associated with use of the technology and has taken some steps to help mitigate these risks, such as using encrypted email to transfer photos. As a result, this recommendation is closed as implemented.


| U.S. Capitol Police | The Chief of Police, U.S. Capitol Police, should implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. (Recommendation 25) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Capitol Police used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using. Consequently, we recommended that the Capitol Police implement a mechanism to track what non-federal systems with facial recognition technology are used by employees to support investigative activities. In June 2023, the agency issued a Standard Operating Procedure to its Investigations Division employees. The purpose of the Standard Operating Procedure is to establish a uniform process for requesting and using facial recognition systems, including non-federal systems, for investigations. It also establishes a tracking mechanism. Specifically, according to the Standard Operating Procedure, if an employee receives approval to use a system, they are required to record certain details (e.g., a copy of the image intended for submission) in the Capitol Police records management system for tracking purposes. As a result, this recommendation is closed as implemented.


| U.S. Capitol Police | The Chief of Police, U.S. Capitol Police, should, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. (Recommendation 26) |

In June 2021, we reported on the use of facial recognition technology by agencies that employ law enforcement officers (GAO-21-518). We found that between April 2018 and March 2020, the U.S. Capitol Police used non-federal facial recognition technology to support investigative activities, but did not have a mechanism to track which systems employees were using, or assess the risks of using such systems. Consequently, we recommended that the agency, after implementing a mechanism to track non-federal systems, assess the risks of using such systems, including privacy and accuracy-related risks. As of August 2024, agency officials told us that the Capitol Police no longer uses non-federal facial recognition systems. Officials said the agency's tracking mechanism had not captured any use of non-federal facial recognition technology, and that the agency has no plans to use non-federal facial recognition technology to support investigations in the future. If the Capitol Police does use non-federal facial recognition systems in the future, officials said the agency will assess those systems for risks. As a result, this recommendation is closed as no longer valid.
