Science & Tech Spotlight: Generative AI in Health Care

Highlights

Why This Matters

The health care sector faces many challenges, such as high costs, long timelines for drug development, and provider burnout. Generative artificial intelligence (AI) is an emerging tool that may help address these and other challenges.

Key Takeaways

- Several companies are developing generative AI tools to speed up drug development and clinical trials, improve medical imaging, and reduce administrative burdens.

- However, most tools remain largely untested in real-life settings, and generative AI can create erroneous outputs.

- The technology raises questions for policymakers about how to balance potential benefits with protections for patients and their data.

The Technology

What is it? Generative AI is a machine learning technology that can create digital content such as text, images, audio, or video. Unlike other forms of AI, it can generate novel content. For example, using existing chemical and biological data, it can create new molecular structures with desired characteristics for use in drug development.

How does it work? Like other forms of machine learning, generative AI uses algorithms that are “trained” on very large datasets, ranging from millions to trillions of data points. Training is the iterative process of feeding data through the model until it can perform a specific task (see GAO-24-106946).
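As a rough illustration of what "iterative" means here, the sketch below trains a toy two-parameter model by repeatedly passing a small dataset through it and nudging the parameters to reduce error. It is not a generative model and is far simpler than any tool discussed in this Spotlight; the function name `train`, the learning rate, and the data are all assumptions chosen for illustration.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # One pass of the data through the model: measure the error gradient.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Adjust the parameters slightly in the direction that reduces error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train(xs, ys)
```

After enough passes, `w` and `b` settle close to 2 and 1, the values used to generate the data. Generative AI models follow the same basic pattern but adjust far more parameters over far more data.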

In the health care sector, generative AI models generally train on a large volume of curated health care data, which could include clinical notes, clinical trial data, and medical images.

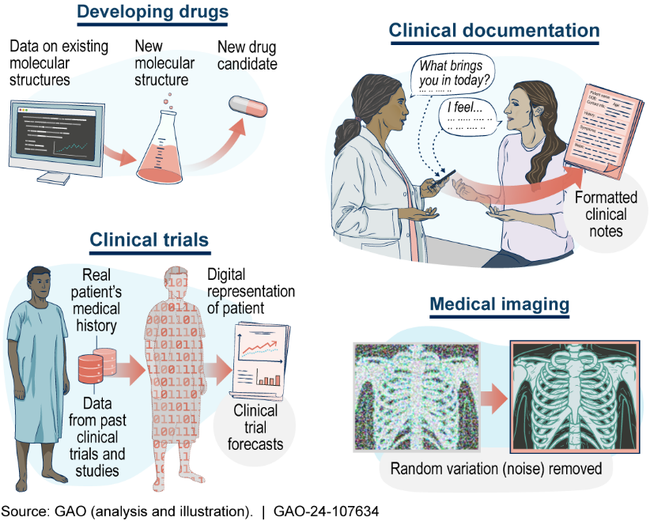

Figure 1. Examples of Potential Uses of Generative AI in Health Care

Opportunities

Generative AI has a wide range of emerging uses in health care, which vary in maturity. Some examples include:

- Developing drugs. Generative AI can design new drug candidates, which could accelerate development timelines by replacing the conventional manual design process. Like conventionally designed drug candidates, generative AI-designed drugs must be validated and interpreted by researchers, and safety and efficacy must be demonstrated in clinical trials. As of December 2023, around 70 drugs developed with some assistance from generative AI were in clinical trials with patients, though none are on the market, according to recent studies.

- Clinical documentation. Generative AI can address a range of needs related to clinical documentation. Today, models can draft clinical notes in specified formats using a transcription of doctor-patient interactions. Researchers are also developing models that could compile and verify information in electronic health records to obtain insurance preauthorization. Such tools might help reduce administrative burden and burnout in medical staff.

- Clinical trials. Some companies offer models that create digital representations of patients based on data from past clinical trials and observational studies. The generative AI models forecast how each participant’s health would likely progress during the trial if that participant did not receive the treatment being tested. Such forecasts might provide greater confidence in the results of small trials.

- Medical imaging. Researchers are developing generative AI models to enhance the quality of medical images, such as MRI scans. Models can be trained to identify noise—random variation of brightness or color—and generate a clean image, potentially improving diagnostic accuracy.

Challenges

Users of generative AI may not be able to tell why it produces a certain output, which could make it harder for them to judge the reliability of that output. Generative AI presents other notable challenges in health care, including:

- False information. Generative AI models can generate plausible but inaccurate outputs, often called “hallucinations,” which may be dangerous in a medical setting. For example, imaging models can create fake lesions or blur important details, and documentation tools might incorrectly summarize symptoms. AI is not a substitute for professional medical expertise.

- Data privacy. Health care data, such as medical images, are often sensitive and subject to privacy laws. Their collection and use might expose patients’ information.

- Data availability. Generative AI models typically require a very large volume of training data to produce accurate and reliable outputs. But health care data can be fragmented across multiple systems and formats, and may be low-quality or inconsistent. For drug development, there are few public databases and proprietary data are often inaccessible, making it difficult to obtain sufficient data.

- Bias. If the data used to train generative AI models are not representative, the models may produce biased outputs and underperform for underrepresented groups.
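One common way to look for the kind of underperformance described in the bias challenge above is to compare a model's accuracy separately for each group. The sketch below shows that comparison under simple assumptions: the function name `accuracy_by_group`, the group labels, and the records are hypothetical, and real evaluations would use larger samples and more than one metric.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the share of correct predictions for each group.

    Each record is a (group, predicted, actual) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation records: group "A" is well represented, "B" is not.
records = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0),
]
results = accuracy_by_group(records)
```

A large gap between groups in `results` would be a signal to examine whether the training data adequately represent the lower-scoring group.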

Policy Context and Questions

- What safeguards are needed to address false information and bias in generative AI in health care settings?

- What steps should be taken to protect patient data used to train generative AI models from unauthorized disclosure and to ensure compliance with relevant privacy laws?

- What steps should be taken to improve access to health care datasets for training generative AI models?

Selected GAO Works

Artificial Intelligence: Generative AI Technologies and Their Commercial Applications, GAO-24-106946.

Technology Assessment: Artificial Intelligence in Health Care (Diagnostics), GAO-22-104629.

Selected References

Sai et al., "Generative AI for Transformative Healthcare: A Comprehensive Study of Emerging Models, Applications, Case Studies, and Limitations," in IEEE Access, vol. 12, pp. 31078-31106, 2024.

For more information, contact Brian Bothwell at (202) 512-6888 or bothwellb@gao.gov.