Artificial Intelligence: Key Practices to Help Ensure Accountability in Federal Use

Fast Facts

Artificial intelligence is a rapidly evolving technology that presents operational and workforce challenges for the federal government.

We testified on our key practices for the responsible use of AI in federal agencies. The practices are organized around four principles—governance, data, performance, and monitoring. For example, "monitoring" calls for agencies to ensure AI systems remain reliable and relevant over time.

Also, there is a severe shortage of federal staff with AI expertise. We previously reported on experts' opinions about establishing a new digital services academy—similar to the military academies—to train future workers.

Highlights

What GAO Found

Artificial intelligence (AI) is evolving at a rapid pace and the federal government cannot afford to be reactive to its complexities, risks, and societal consequences. Federal guidance has focused on ensuring AI is responsible, equitable, traceable, reliable, and governable. Third-party assessments and audits are important to achieving these goals. However, a critical mass of workforce expertise is needed to enable federal agencies to accelerate the delivery and adoption of AI.

Participants in an October 2021 roundtable convened by GAO discussed agencies' needs for digital services staff, the types of work that a more technical workforce could execute in areas such as artificial intelligence, and challenges associated with current hiring methods. They noted such staff would require a variety of digital and government-related skills. Participants also discussed challenges associated with existing policies, infrastructure, laws, and regulations that may hinder agency recruitment and retention of digital services staff.

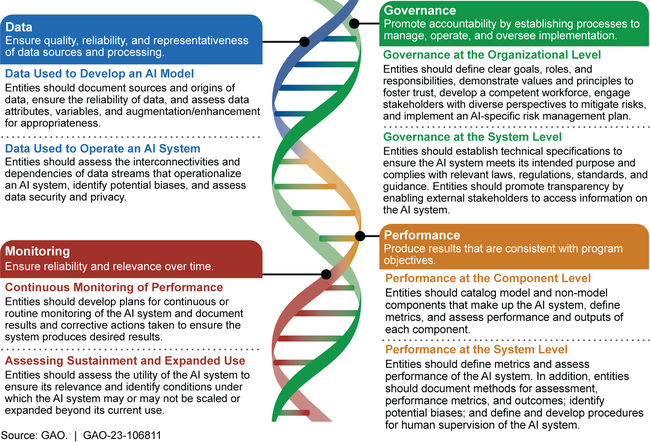

During a September 2020 Comptroller General Forum on AI, experts discussed approaches to ensure federal workers have the skills and expertise needed for AI implementation. Experts also discussed how principles and frameworks on the use of AI can be operationalized into practices for managers and supervisors of these systems, as well as third-party assessors. Following the forum, GAO developed an AI Accountability Framework of key practices to help ensure responsible AI use by federal agencies and other entities involved in AI systems. The Framework is organized around four complementary principles: governance, data, performance, and monitoring.

Artificial Intelligence (AI) Accountability Framework

Why GAO Did This Study

To help managers ensure accountability and the responsible use of AI in government programs and processes, GAO has developed an AI Accountability Framework. Separately, since 2001 GAO has identified mission-critical gaps in federal workforce skills and expertise in science and technology as a high-risk area.

This testimony summarizes two related reports: GAO-22-105388 and GAO-21-519SP. The first report addresses the digital skills needed to modernize the federal government. The second report describes experts' discussions of the risks and challenges of applying AI systems in the public sector.

To develop the June 2021 AI Accountability Framework, GAO convened a Comptroller General Forum in September 2020 with AI experts from across the federal government, industry, and nonprofit sectors. The Framework was informed by an extensive literature review, and the key practices were independently validated by program officials and subject matter experts.

For the November 2021 report on digital workforce skills, GAO convened a roundtable discussion in October 2021 composed of chief technology officers, chief data officers, and chief information officers, among others. Participants discussed ways to develop a dedicated talent pool to help meet the federal government's needs for digital expertise.

For more information, contact Taka Ariga at (202) 512-6888 or arigat@gao.gov.